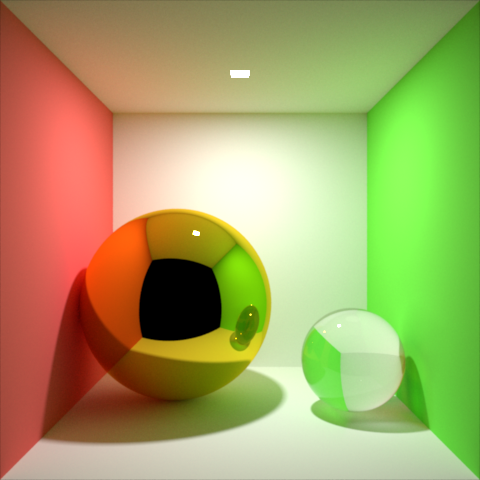

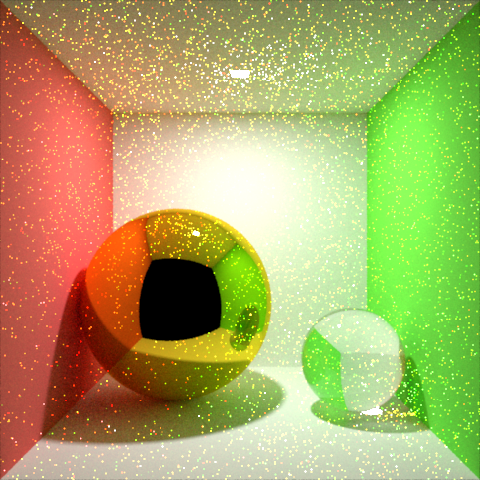

This year, I designed and created the cover image for the Penn Computer Graphics holiday card. I rendered the image in my own renderer, Photorealizer.

The render features, among other things, global illumination accomplished with Monte Carlo path tracing, path-traced subsurface scattering, a 3D procedural wood texture, reflection from and transmission through rough surfaces, correct behavior at the glass–water (and other) interfaces, an HDR environment map, a spherical light, depth of field, anti-aliasing using a Gaussian reconstruction filter, and an S-shaped transfer curve.
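As a rough illustration of the anti-aliasing step, here is a minimal Python sketch of pixel reconstruction with a truncated Gaussian filter. This is not Photorealizer's actual code: the function names, the filter radius, and the falloff parameter `alpha` are all assumptions chosen for the example.

```python
import math

def gaussian_weight(dx, dy, radius=2.0, alpha=2.0):
    """Truncated Gaussian weight for a sample offset (dx, dy), in pixels.

    radius and alpha are illustrative values, not the renderer's settings.
    """
    if abs(dx) > radius or abs(dy) > radius:
        return 0.0

    def g(d):
        # Subtract the value at the cutoff so the filter reaches zero at its edge.
        return math.exp(-alpha * d * d) - math.exp(-alpha * radius * radius)

    return g(dx) * g(dy)

def reconstruct_pixel(samples, px, py, radius=2.0, alpha=2.0):
    """Weighted average of (x, y, value) samples around the center of pixel (px, py)."""
    cx, cy = px + 0.5, py + 0.5
    total = 0.0
    weight_sum = 0.0
    for x, y, value in samples:
        w = gaussian_weight(x - cx, y - cy, radius, alpha)
        total += w * value
        weight_sum += w
    # Normalize by the total weight so a constant image stays constant.
    return total / weight_sum if weight_sum > 0.0 else 0.0
```

Each sample contributes to every pixel whose filter footprint covers it, with samples near the pixel center counting the most. Dividing by the summed weights keeps the overall brightness unchanged.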

Instead of using neutral white lights like I've often done in the past, I lit this scene with complementary blue and yellow lights (a glacier HDR environment map and a small, bright spherical light, respectively). This gives the image a more interesting and varied appearance, while keeping the light fairly neutral on the whole. When I began working on the lighting, I used just the environment map, and the image appeared far too blue. Instead of zeroing out the saturation of the environment map or adjusting the white balance of the final image, I decided to add the yellow spherical light to balance it out (inspired by the stage lighting course I took this past semester).

I spent some time tweaking the look of the snowflakes—the shape, the material, and the distribution. I ended up settling on the disc shape, which is actually a squished sphere (not a polygonal object). All of the snowflakes are instances of that same squished sphere. For the material, I made the snowflake diffusely reflect half of the incident light, and diffusely transmit the other half (in other words, a constant BSDF of 1/(2π)). This gives a soft, bright, translucent appearance, while still being very efficient to render (compared to subsurface scattering).
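To see why a constant BSDF of 1/(2π) splits the light evenly, note that integrating 1/(2π) · cos θ over one hemisphere gives (1/(2π)) · π = 1/2, so half the incident energy leaves on each side of the surface. The Python sketch below checks this numerically with Monte Carlo integration. It is only an illustration, not code from Photorealizer: the function names are hypothetical, and the surface normal is assumed to point along +z.

```python
import math
import random

# Constant BSDF over the full sphere of outgoing directions.
BSDF_VALUE = 1.0 / (2.0 * math.pi)

def sample_uniform_sphere(rng):
    """Return a uniformly distributed unit direction (x, y, z)."""
    z = 1.0 - 2.0 * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * rng.random()
    return (r * math.cos(phi), r * math.sin(phi), z)

def estimate_energy(num_samples=200_000, seed=1):
    """Estimate the integral of f * |cos(theta)| over each hemisphere.

    Returns (reflected, transmitted), assuming the normal is +z, so that
    z > 0 is the reflection side and z < 0 is the transmission side.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (4.0 * math.pi)  # density of uniform sphere sampling
    reflected = 0.0
    transmitted = 0.0
    for _ in range(num_samples):
        _, _, wz = sample_uniform_sphere(rng)
        contribution = BSDF_VALUE * abs(wz) / pdf
        if wz > 0.0:
            reflected += contribution
        else:
            transmitted += contribution
    return reflected / num_samples, transmitted / num_samples
```

Both estimates should come out close to 0.5, and their sum close to 1, meaning the material neither absorbs nor creates energy.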

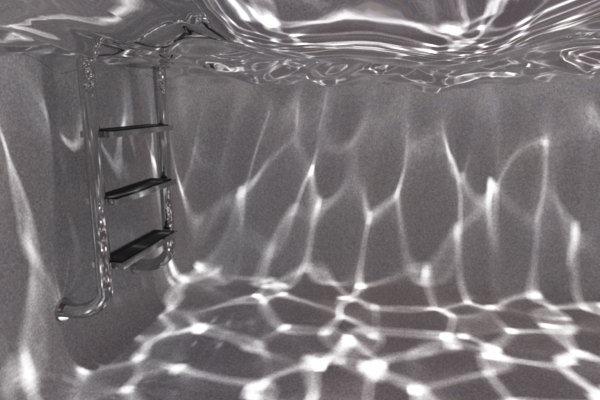

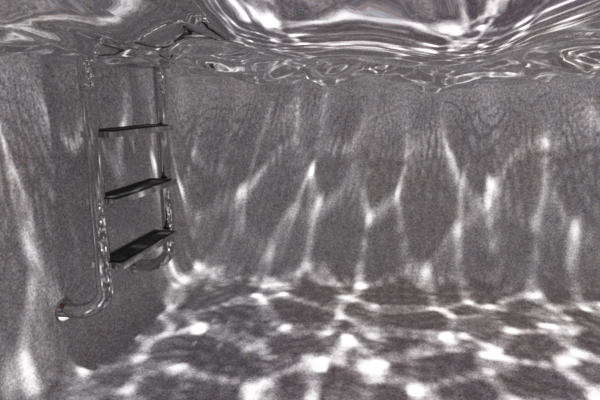

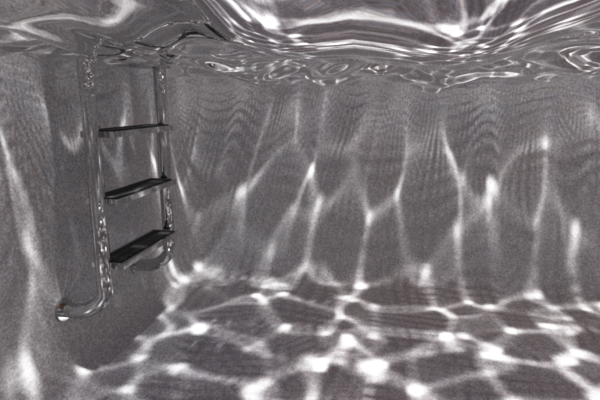

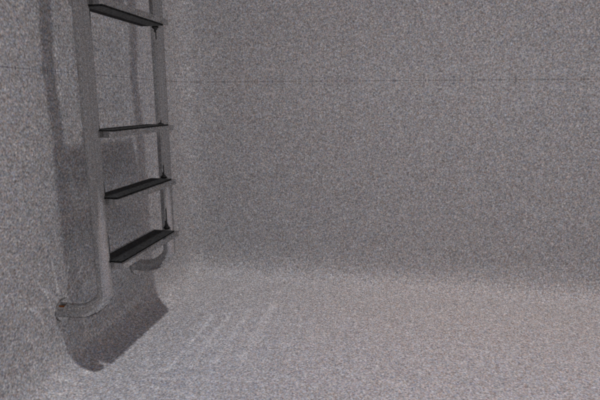

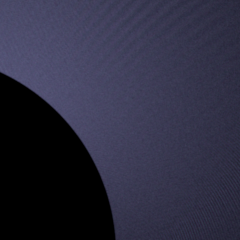

I made some different versions of the image to show how certain features affect the look of the final image:

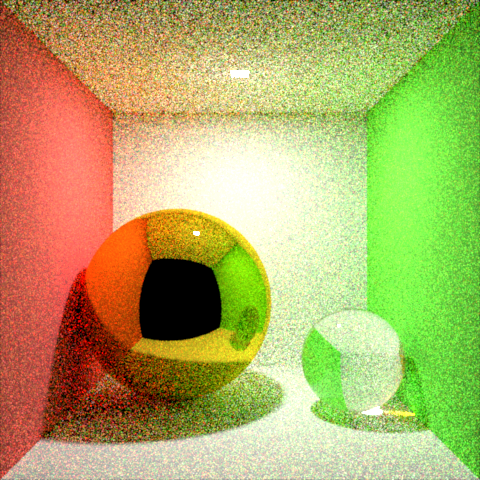

|

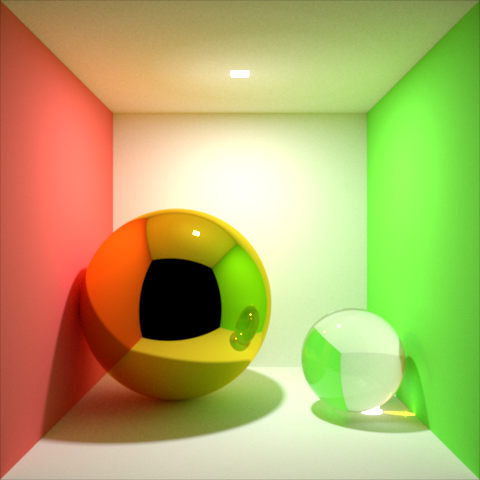

| Half-size version, for comparing to the images below. |

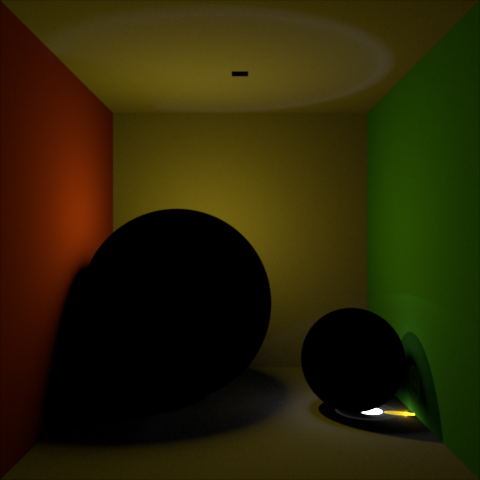

|

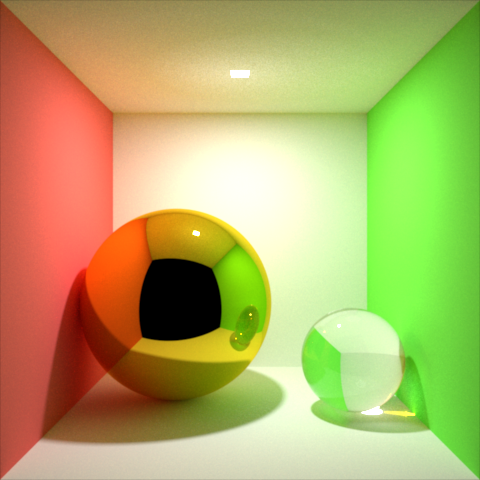

| White light version. |

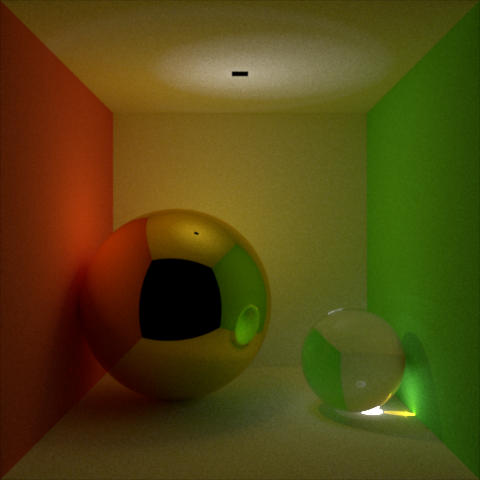

|

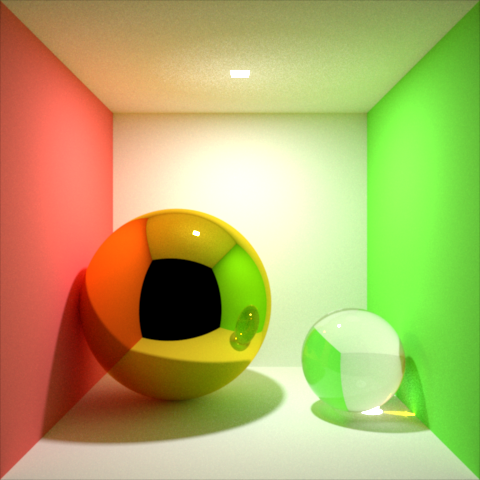

| Display-linear version. |

|

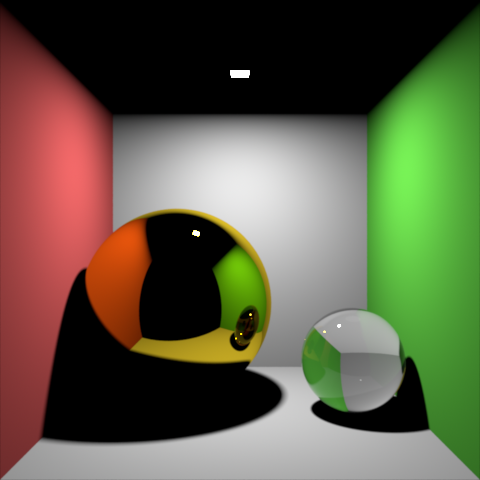

| Opaque perfectly diffuse version (statue, snowflakes, and tablecloth). |
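The display-linear version presumably leaves out the S-shaped transfer curve mentioned earlier. For a sense of what such a curve does, here is a small Python sketch. The exact curve used in Photorealizer is not given here, so the smoothstep polynomial below is purely a stand-in, and the function names are hypothetical. The sRGB encoding step is the standard piecewise formula.

```python
def clamp01(x):
    """Clamp a value to the range [0, 1]."""
    return min(max(x, 0.0), 1.0)

def s_curve(x):
    """Stand-in S-shaped curve (smoothstep): darkens shadows, brightens highlights."""
    x = clamp01(x)
    return x * x * (3.0 - 2.0 * x)

def srgb_encode(linear):
    """Standard sRGB encoding of a display-linear value in [0, 1]."""
    linear = clamp01(linear)
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1.0 / 2.4) - 0.055

def to_display(linear, use_s_curve=True):
    """Map a linear value to an encoded pixel value, with or without the S-curve."""
    value = s_curve(linear) if use_s_curve else clamp01(linear)
    return srgb_encode(value)
```

With `use_s_curve=False`, the displayed luminance stays proportional to the rendered value. With the curve enabled, the midpoint is unchanged while contrast increases around it, which is the general film-like look an S-shaped curve is meant to give.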

For best results, the model needed to be very clean, exact, and physically accurate. I chose to model it using Houdini. I think Houdini is a very well-made piece of software. I really like its node paradigm, procedural nature, and clean user interface. I like having the ability to set parameters using precise numbers, or drive them with scripts, and having the ability to go back and modify parameters in any part of the network at any time.

In addition to using Houdini, I created the LOVE statue shape in Illustrator, and I procedurally added the snowflakes in Photorealizer.

Here are screenshots of the Houdini network and the resulting model:

(Screenshot: Houdini network.)

(Screenshot: Houdini model.)